Open-Source Cybersecurity Is a Ticking Time Bomb

In March, a software bug threatened to derail large swaths of the web. XZ Utils, an open-source compression tool embedded in myriad software products and operating systems, was found to have been implanted with a backdoor.

The backdoor—a surreptitious entry point into the software—would have allowed a person with the requisite code to hijack the machines running it and issue commands as an administrator. Had the backdoor been widely distributed, it would have been a potential disaster for millions of people.

Luckily, before the malicious update could be pushed out into wider circulation, a software engineer from Microsoft noticed irregularities in the code and reported it. The project was subsequently commandeered by responsible parties and has since been fixed.

While disaster was narrowly averted, the episode has highlighted liabilities in the open-source development model that are longstanding and not easily fixed. The XZ episode is far from the first time an open-source bug has threatened to derail large swaths of the web. It certainly won’t be the last. Understanding the vexing cybersecurity dilemmas posed by open-source software requires a tour through its byzantine and not altogether intuitive ecosystem. Here, for the uninitiated, is our attempt to give you that tour.

The Web Runs on FOSS

Today, the vast majority of codebases rely on open-source code. It is estimated that 70 to 90 percent of all software “stacks” are composed of it. In all likelihood, the vast majority of the apps on your phone have been designed with it, and, if you are one of the 2.5 billion people in the world who use an Android device, your operating system is a modified version of software that originated with the Linux kernel, the largest open-source project in the world.

When people talk about software “supply chains”—the digital scaffolding that supports our favorite web products and services—much of that code is made of open-source components. Its ubiquity has led observers to refer to open source as the “critical infrastructure” of the internet—a Protean substance that is both indispensable and incredibly powerful.

Yet, as important as it is, open-source software remains a subject that isn’t widely understood by most people outside of the tech industry. Most people have never even heard of it.

Why Use Open Source?

For the uninitiated, a quick explanation might go something like this: Unlike “closed” or proprietary software, free and open source software, or FOSS, is publicly inspectable and can be used or modified by anyone. Its usage is determined by a variety of licensing agreements and, quite uniquely, the components are often maintained by volunteers—unpaid developers who spend their free time keeping the software up to date and in good working condition.

Open-source projects can start as pretty much anything. Often, they are small projects for digital tinkerers who simply want to build something new. Eventually, some projects get popular, and private companies will begin incorporating them into their commercial codebases. In many cases, when a corporate development team decides to create a new application, they will construct it using a wealth of smaller, already existing software components which are comprised of hundreds or even thousands of lines of code. These days, most of those components come from the open-source community.

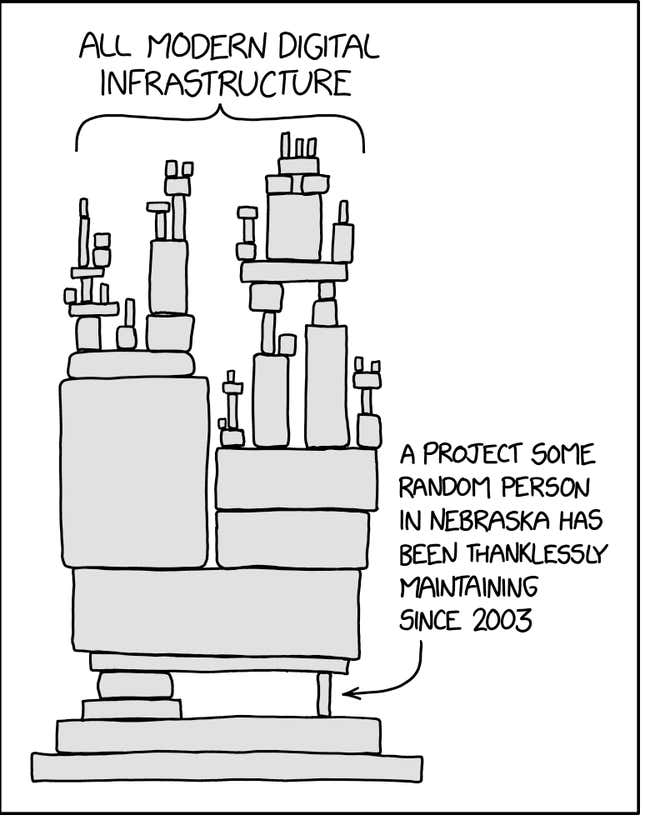

It can be sort of difficult to picture how this odd relationship between commercial software and the open source ecosystem works. Luckily, several years ago the webcomic artist Randall Munroe created what is now a well-known meme that helps visualize this counterintuitive dynamic:

There are many reasons that companies turn to open source for their development needs. Aside from the fact that it’s free, FOSS also allows for software to be created with efficiency and speed. If a programmer doesn’t have to worry about the fundamental building blocks of an application’s code, it frees them up to focus on the software’s more marketable elements. In a competitive environment like Silicon Valley—where a speedy time to market is a critical advantage—open source is pretty much a DevOps imperative.

But with speed and agility comes vulnerability. If FOSS is a ubiquitous element of modern software, there are also structural problems with the ecosystem that put massive amounts of software at risk. Those problems can get pretty hairy pretty quickly—often with disastrous results.

Bugs From Hell

The XZ episode didn’t end in disaster, but it easily could have. One instance where web users weren’t so lucky was the notorious “log4shell” incident. Three years ago, in November of 2021, a code vulnerability was discovered in the then-popular open-source program log4j. Logging libraries like log4j are regularly integrated into apps, where coders use them to record and assess a program’s internal processes. Log4j, which is maintained by the open-source organization Apache, was widely used at the time of the bug’s discovery and was embedded in millions of applications all over the world.

Unfortunately, log4j’s bug, dubbed “log4shell,” was quite bad. Like the XZ bug, it involved remote code execution. This meant that a hacker could quite easily inject their own “arbitrary” code into an impacted program, enabling them to hijack the machine running it. Due to log4j’s popularity, the scope of the bug was massive. Major, multi-billion dollar companies were affected. Hundreds of millions of devices were vulnerable. In the days after the flaw’s disclosure, experts warned that the vulnerabilities were a ticking time bomb and that cybercriminals were already looking to exploit them.

The discovery of the bug sent corporate America into a full-blown panic and spooked the highest levels of the federal government. Some of the biggest companies in the world were at risk, making it a matter of national security. Several weeks after the bug’s discovery, Anne Neuberger, a top cybersecurity advisor to President Joe Biden, called a White House summit on open source security, inviting executives from Microsoft, Meta, Google, Amazon, IBM, and other big names, as well as influential open source organizations like Apache, the Linux Foundation, and the Linux Foundation’s Open Source Security Foundation, or OpenSSF. The meeting was less concerned with how to remedy the hellish vulnerability than with figuring out how to stop this sort of thing from ever happening again.

Not long after the meeting, top executives at the Linux Foundation, including then-general manager of OpenSSF Brian Behlendorf, began formulating a so-called “mobilization plan” to better secure the entire FOSS ecosystem. The federal government, meanwhile, began developing its own strategies to further regulate the tech industry. Most notably, President Biden’s cybersecurity plan, which was published last year, has sought to prioritize a number of new safeguards to prevent the emergence of new, highly destructive bugs.

Yet as the dangers surrounding the XZ vulnerability show, FOSS is still an environment that, at its highest levels, is vulnerable to bugs that could have catastrophic, system-wide implications for the internet. Understanding the risks in FOSS, however, isn’t easy. It requires a detour into the unique ecosystem that produces so much of the world’s software.

Closed Source Doesn’t Mean More Secure

Before we go any further, it’d be helpful to make one thing clear: Just because a software program is “closed source” or proprietary doesn’t mean it’s more secure. Indeed, security experts and FOSS proponents contend that the opposite is true. We’ll revisit this issue later but, for the time being, I’ll just direct your attention to a little company called Microsoft. This company, despite being a prominent, closed-source corporate giant, has had its product base hacked countless times, sometimes to disastrous effect. Many companies that keep their products closed have similar track records, and, unlike with open-source software, their security issues are often kept secret, since nobody but the company has access to the code.

The Maintainers

If you want to talk about the security risks in open-source software, you have to start by talking about the people behind the code. In the open-source ecosystem, those people are known as “maintainers” and, as you might expect, they are in charge of maintaining the quality of the software.

Explaining the role of the maintainer is a little complicated. Maintainers might aptly be compared to the construction workers who—in the real world—build our roads and bridges. Or, the engineers who design them. Or both. In short, a maintainer is the caretaker (and often the creator) of a particular open-source project but, in many cases, they work together with “contributors”—users of the software who want to make improvements to the code.

Maintainers host their open-source projects on public repositories, the most popular of which is GitHub. These repositories include interactive mechanisms that are ultimately controlled by the maintainer. For instance, when a contributor wants to add something to a project, they might submit a “pull request” on GitHub, which includes the new code they hope to add. The maintainer is then tasked with signing off on a “merge,” which will update the project to reflect the contributor’s changes. It’s through this collaborative process that open-source projects continually grow and transform.

As the master controller of these living, iterative projects, the maintainer’s job often requires an immense amount of work—everything from ongoing correspondence with users and contributors, to signing off on code commits, to creating “documentation” guides that show how everything inside the software actually works. Yet, for all of that work, a whole lot of maintainers are not paid particularly well. Most are not paid at all. Open source is supposed to be free, remember? In the world of FOSS, hard work is repaid with little more than the knowledge that your code is being put to good use.

The plight of the maintainer is a peculiar one and is very much tied up with open source’s complicated history, as well as its not altogether straightforward relationship with the corporations that use its code.

A Flash History of FOSS

It’s helpful to consider that, in the beginning, open source didn’t have much to do with corporatism or money. In fact, it was just the opposite.

FOSS grew out of an idealistic hacker movement from the 1980s called the “free software” movement. For all intents and purposes, that movement began with Richard Stallman—an eccentric computer scientist who looks a little like Jerry Garcia and has long espoused a bold form of cybernetic idealism. In 1983, while working at the MIT Artificial Intelligence Lab, Stallman established GNU, a repository of free software. The idea behind the collection was user control. Stallman balked at the idea that private companies could keep software behind a walled garden. He felt that software users needed the ability to control the programs they used—to see how they worked, as well as to change or modify them if they wished. As such, Stallman postulated the idea of “free” software—famously commenting that he meant free “like free speech, not like free beer.” That is to say, Stallman is not against developers getting paid, but their code should be open and visible to all for future improvement.

In 1991, a then-21-year-old Finnish computer programming student named Linus Torvalds spurred the next great innovation in open-source history. Reportedly out of boredom, Torvalds created a new operating system and named it after himself, calling it “Linux.” Pivotally, Torvalds created the Linux “kernel,” the vital component within any operating system that governs the interface between a computer’s hardware and its digital processes. It wasn’t clear at the time, but Linux would go on to become the largest, most popular open-source project in the world. Today, there are hundreds of Linux distributions (or “distros”) that use the kernel that Torvalds created.

In 1998, a small but influential group inside the free software community decided they wanted to break away from the movement’s idealistic roots and take the software mainstream. A summit was held in Mountain View, Calif., where participants sought to discuss how to “re-brand” free software into something “the corporate world would hasten to buy,” writes Eric Raymond, a well-known programmer, and one of the meeting’s attendees. “Open source” was pitched as a “marketing term,” invented with the purpose of capturing the imaginations of America’s tech titans and steering them away from the vaguer, more Communist-adjacent terminology of “free,” Raymond explains. The hope was that businessmen would forget Stallman’s hippy-dippy stuff and buy into the more pragmatic-sounding term.

It turns out that they did buy it. It was the Dot Com bubble, Silicon Valley was booming, and private enterprise was hungry for new ways to unleash profits. To many businesses, open source—which presented a shared pool of free labor and an industrial model for innovation—seemed like a good idea. The “open source” movement thus largely splintered from the “free” movement, becoming its own, corporately-propelled organism, which, in time, took over a greater and greater space inside the software industry. Linux became ubiquitous, Torvalds became famous, and Stallman largely continued to do what he’d always done: advocate for his digital freedoms and disparage the corporate software giants. Today, the world runs on “open source,” though it’s still a term Stallman categorically rejects. He still prefers the term “free” software.

Code for Nothing

Open-source software has become a ubiquitous resource for corporations, but the developers who are responsible for creating and maintaining that vital material haven’t always seen the support—financial or otherwise—that they deserve. Indeed, many companies are often content to grab the code and scat, essentially exploiting the free work without giving back to the projects or their creators.

For the last few years, the company Tidelift has published a survey based on interviews with hundreds of open-source maintainers. Each year, the survey shows pretty much the same thing: Maintainers are overworked, underappreciated, and burned out. More than half of open source maintainers are not paid at all for their work, the survey results have shown. A 2020 Linux Foundation survey of contributors similarly found that more than half of respondents (51.65 percent) said they were unpaid.

Maintainer burnout has been blamed for the XZ incident. Indeed, the original maintainer of the software project reported feeling “behind” on it and eventually ceded responsibility to a user named “Jia Tan.” This user ended up being the person who introduced the backdoor into the software component.

There have long been calls for the private sector to do more to support the FOSS ecosystem but, for the most part, those calls have fallen on deaf ears. It’s true that, in recent years, large tech corporations have poured money into certain sectors of the open-source ecosystem—but often only in places where it’s advantageous for them to do so.

For the vast majority of FOSS coders, maintaining projects still comes with little to no compensation, and it’s often less of a fun hobby or a real job than a thankless hustle (think the creator economy with code). On Reddit, you can find thread after thread where developers discuss ways to bootstrap FOSS financing. Some suggest turning to Liberapay, an open-source crowdfunding platform known for doling out money to cash-stressed devs. Others think Patreon is a good option. At least one person encourages people to reach out to Gitcoin, a Web3 startup that uses cryptocurrency grants to sponsor FOSS projects. A lot of developers just incorporate donation portals on their GitHub project pages, with links to stuff like Stripe, PayPal, or Buy Me a Coffee. As with most creative endeavors, begging for money ends up being the surest way to make a buck.

Heartbleed

You can probably imagine the security issues that can arise from having an immensely popular piece of software maintained via OnlyFans-type contributions. Studies have shown that the vast majority of commercial apps contain open-source components that are no longer updated or have been abandoned by their maintainers.

The dangers inherent in building enterprise digital infrastructure off the backs of a decentralized, sometimes flighty labor pool are readily apparent if you know the story of Heartbleed.

Discovered in 2014, the Heartbleed bug was a critical vulnerability in OpenSSL, an open-source encryption library that, at the time, powered much of the secure communication across the web. Large companies like Google, Facebook, Netflix, and Yahoo used it, as did a vast assortment of other applications and services, from VPNs to instant messaging and email platforms. Naturally, the discovery of the bug, which allowed an attacker to trick vulnerable servers into handing over sensitive data like usernames and passwords, set off outright panic throughout much of the internet.
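For readers who want to see the shape of the flaw, here is a rough simulation in Python. It is a sketch under loud assumptions, not OpenSSL's actual code: the function names, the fake "memory," and the credentials in it are all invented for illustration. The core idea is real, though. A heartbeat request states how long its payload is, and a vulnerable server echoed back that many bytes without checking the claim against what was actually sent.

```python
# Hypothetical stand-in for server memory: the heartbeat payload happens
# to sit right next to unrelated sensitive data (invented for this sketch).
def build_memory(payload: bytes) -> bytes:
    return payload + b"user=alice;password=hunter2"


def heartbeat_vulnerable(payload: bytes, claimed_length: int) -> bytes:
    """Echo back `claimed_length` bytes, trusting the sender's claim."""
    memory = build_memory(payload)
    # Flaw: the claimed length is never compared with the real payload size,
    # so a large claim spills neighbouring data into the reply.
    return memory[:claimed_length]


def heartbeat_fixed(payload: bytes, claimed_length: int) -> bytes:
    """Same echo, but requests that overstate their length are dropped."""
    if claimed_length > len(payload):
        return b""  # ignore the malformed request
    return build_memory(payload)[:claimed_length]


# The sender transmits two bytes but claims to have sent 64.
leak = heartbeat_vulnerable(b"hi", 64)
safe = heartbeat_fixed(b"hi", 64)

print("vulnerable reply:", leak)
print("fixed reply:", safe)
```

In this toy version the "fix" is a single length check, which is part of why the bug became such a cautionary tale: the missing safeguard was small, and it sat unnoticed in widely used code.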

“We found out that a thing that everybody used was being supported by just a couple people who weren’t really being paid for it at all,” said Jon Callas, a cryptography expert and software engineer, recalling the chaos that erupted at the time. Callas didn’t work on the OpenSSL team, but he knew the people who did, and he worked on a similar project at the time.

As Callas suggests, the problem with OpenSSL seemed to inevitably come back to the maintainers. Indeed, it would come out that OpenSSL, responsible for securing privacy and security services for droves of major blue chip companies, was actually maintained by a small, 11-person team, which included a “core” team of four people and only one full-time employee.

“It is a real problem,” Callas said of open source’s maintenance issues. Callas has some experience with this, having been one of the key architects behind OpenPGP, an open standard of PGP encryption used widely throughout the internet. “Figuring out how software packages, which are basically (digital) infrastructure, get supported and maintained is a huge issue.”

Heartbleed exposed a real problem with what had been the operating paradigm for open-source security until that time. For years, the FOSS world was guided by a doctrine that said open-source software was more secure than commercial software. The reasoning went that FOSS’s transparency, with its code open to the entire web, allowed for greater visibility into its flaws and thus greater opportunity to fix them. This is what is known as the “more eyes” argument. So the thinking went, commercial software only had one development team to look out for bugs; open source had the entire internet.

There is an elegant logic to this argument but it also has shortcomings. The “more eyes” argument works in an ideal world, one where FOSS projects get everything they need. Of course, in the real world, open source is only as secure as the resources and people allocated to maintaining it. More often than not, FOSS projects have fewer eyes than they need, not more. Or they might have the wrong eyes looking at them, like those of a cybercriminal.

It’s undeniable that a certain number of FOSS projects are incredibly secure. The Linux kernel is said to have been pored over by some 14,000 different contributors since 2005. The Linux Foundation employs around 150 people and brought in an estimated $262.6 million in revenues last year, a majority of which came from corporate and private contributions. In many ways, it’s because of that support and transparency that onlookers were able to catch Jia Tan, the apparent progenitor of the XZ vulnerability. But the problem with using Linux as an exemplar of open-source security is pretty obvious: Most open-source projects are not Linux and they do not get Linux-level support.

The Backstabber’s Knife Collection

When Heartbleed happened, it was considered a “wake-up call” for the software community. For the first time, the incident turned corporate America’s attention to the security issues surrounding open source. It also compelled the Linux Foundation to create the Core Infrastructure Initiative, which sought to identify open-source projects of vital importance that needed additional support (it was replaced by the OpenSSF in October of 2021).

Yet if Heartbleed was a canary in the digital coal mine, it ultimately wasn’t one that everybody heeded. Indeed, the threat landscape since 2014 has only gotten more complex, as FOSS has become a larger and more integral part of the web. Today, the problems aren’t limited to the occasional catastrophic bug. Indeed, they’re a whole lot more complicated than that.

In our modern world, commercial software is everywhere. Our lives are more digital and interconnected than ever before and pretty much everything you own—from your vacuum to your exercise equipment to your toothbrush—comes with an app. As a result, the opportunity for the software running all of those apps to be compromised has expanded greatly. Today, so-called software “supply chain attacks” are relatively common. Such attacks take aim at particularly weak software components, sometimes allowing cybercriminals to exploit one weak piece to take over or corrupt an entire product or system. More often than not, the components that allow initial access into supply chains are FOSS. There are so many ways to hack open-source components within supply chains that the catalog of vulnerabilities was nicknamed the “backstabber’s knife collection” in one notable article from 2020.

One person who knows this complex threat landscape well is Dan Lorenc. A seasoned security professional with a background in FOSS, Lorenc spent nearly a decade working at Google, and at least three years working cybersecurity detail for Google Cloud. Lorenc now owns the supply chain security business Chainguard, which handles many of the same issues that cropped up during his stint with Google.

“I think open source faces some unique challenges, mostly just because of the decentralized nature (of its development). You can’t necessarily trust everybody writing the code,” said Lorenc. “Anybody on the internet can contribute to open source code but not everybody on the internet is a nice person.”

Yes, the unfortunate truth is that the XZ episode is far from the first time that a FOSS maintainer or contributor has introduced malicious software into a project. A 2020 report found that while most bugs in FOSS are simply coding errors, approximately 17 percent, or roughly one in six, were maliciously introduced bugs, or what researchers called “bugdoors.” One notorious example of this occurred in 2018, when the developer of a popular open-source program called event-stream grew tired of maintaining the project and decided to cede control to another developer, a pseudonymous web user named “Right9ctrl.” The only problem was that “Right9ctrl” turned out to be a cybercriminal, who subsequently introduced a malicious update into the software. The update enabled the criminal to hack into a certain brand of cryptocurrency wallets and steal their funds. The malicious code, downloaded some 8 million times, went unnoticed for approximately two months.

FOSS developers sabotaging their own projects has also become more common. In October of 2021, the maintainer of a popular set of npm libraries, a man named Marak Squires, inexplicably destroyed them with a series of bizarre updates. The updates caused the software to regurgitate a stream of incoherent gibberish that effectively ruined whatever project was running the software. It is estimated that this act of digital self-immolation led to the destruction of “thousands” of software projects that relied upon the libraries.

Lorenc also says that there are definitely more “log4js” out there—critical projects that just aren’t getting the attention or maintenance they deserve. Actually, this sort of situation pops up “all the time,” he said.

In cases where such projects blow up in corporate users’ faces, the blame often gets placed on the maintainers. People insinuate that “they’re not doing their jobs professionally, (or) not spending enough time on it,” Lorenc said. “But, really, it’s a complicated problem. They (the maintainers) put something out there for free and then people will start building a gigantic piece of critical production infrastructure on top of it and complain later when bugs are found.”

Taking Inventory

So, what to do? How do you regulate a technological space that is—by its very nature—deeply decentralized, plagued by anonymity, and structurally resistant to any meddling by an overarching authority?

That question has been keeping a lot of people up at night. At various times since the log4j debacle, I have reached out to executives at OpenSSF, the Linux Foundation’s security arm, to discuss progress on its “mobilization plan,” which, if you’ll recall, was put together to create new safeguards for the FOSS environment after the log4shell bug was discovered. When initially proposed, the plan had a lot of moving parts to it and it wasn’t exactly clear which ones would take priority. In 2022, I spoke with the managing director of OpenSSF Brian Behlendorf, who told me that there are at least a couple of proposals within the mobilization plan that are primed for action, ones he called “shovel ready.” One of the most promising solutions is also the most obvious: forcing companies to inventory the code they use.

Weird as it may sound, a lot of companies don’t do that. The OpenSSF has stated that firms often “have no inventory of the software assets they deploy, and often have no data about the components within the software they have acquired.” Not super appealing, right? It’s a little like a construction company building a skyscraper but having no idea what the foundation is made of. Would you want to live or work in a building like that?

The mobilization plan called for the widespread adoption of code inventories, each known in the business as a “software bill of materials,” or SBOM. Such tools provide an inventory of a particular piece of software, collected via algorithm. By telling a user what’s inside their own program, SBOMs allow software providers to check whether those individual components are affected by security risks.

“The best way to think of it is as an ingredient list on the side of a package of food,” said Tim Mackey, who works with security firm Synopsys, one of several companies that offer SBOM services. “The software bill of materials is all about telling you what’s in there and where it came from.”
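To make the ingredient-list idea concrete, here is a minimal sketch in Python. Everything in it is an assumption made for illustration: the `Component` type, the three-item inventory, and the hand-written advisory table stand in for a real SBOM file and a real vulnerability feed, and the package names are simplified. The point is only to show the lookup that an inventory makes possible.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Component:
    """One line of the 'ingredient list': a package name and its version."""
    name: str
    version: str


# Hypothetical inventory, standing in for what an SBOM would list.
SBOM = [
    Component("log4j-core", "2.14.1"),
    Component("xz-utils", "5.4.6"),
    Component("openssl", "3.0.13"),
]

# Hypothetical advisory table: package name -> versions flagged as risky.
# A real pipeline would pull this from a maintained vulnerability database.
ADVISORIES = {
    "log4j-core": {"2.14.1"},
    "xz-utils": {"5.6.0", "5.6.1"},
}


def flag_components(sbom, advisories):
    """Return every inventoried component whose exact version is flagged."""
    return [c for c in sbom if c.version in advisories.get(c.name, set())]


flagged = flag_components(SBOM, ADVISORIES)
for component in flagged:
    print(f"{component.name} {component.version} appears in an advisory")
```

Without the inventory, there is nothing to run the lookup against, which is the gap the mobilization plan was trying to close. Note that the sketch only reports a match; it does nothing to repair the flagged component.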

SBOMs have been around for years, but they have mostly been used to weed out legal risks. Because FOSS usage is girded upon a convoluted variety of licensing agreements, companies have often used SBOMs to determine a codebase’s contents and, therefore, what legal agreements need to be abided by to avoid getting sued. Now, however, they’re seeing adoption to mitigate an entirely different kind of risk.

In May of 2021, the Biden administration issued an executive order that, among other things, mandated that all software contractors that work with the federal government use SBOMs. Mackey said that, since the order went through, his industry has seen an explosion of interest. “It’s been an incredible boost in business,” he said. “Fantastic growth.”

But even if SBOMs are a step in the right direction, they aren’t ultimately a structural solution to the larger security issues posed by FOSS. In fact, they don’t do anything to mitigate risks that exist in code. “Really, they’re just sort of accurate asset inventory,” said former Google dev Dan Lorenc, noting that it’s “crazy” that a majority of companies don’t already have that. “They (SBOMs) don’t fix the bugs, they don’t prevent bugs, they don’t stop attackers from tampering with stuff. They just give you a good baseline.”

Cory Doctorow, a longtime member of the open-source community, says there are currently no incentives for companies to build secure software. When supply chain attacks happen, open-source maintainers get blamed, but the companies using the code are really the ones at fault. “We are in this zone where, not only do companies not have any affirmative duty to make sure that their software is good and that their maintainers feel supported, but volunteers who line up to warn” those companies and their customers “about defects” can be “silenced by a company if they feel that you’re damaging their public image.” Indeed, Doctorow says that it isn’t uncommon for tech companies to sue security researchers who try to reveal bugs in their products.

The total lack of action by companies leaves much of the hard work of software security up to individual maintainers and open-source organizations like the Linux Foundation. To their credit, those organizations have been working hard to come up with new solutions to the security issues posed by FOSS. In addition to encouraging SBOM adoption, the OpenSSF has pursued a number of other security initiatives over the past several years. Those programs include developing free security applications like GUAC, a free software-tracking mechanism that allows coders to hunt for problematic components in their code, and Sigstore, a cryptographic signing tool for verifying the validity of a developer’s software.

If these efforts sound promising, it’s critical to note that they are taking place against the backdrop of rising supply chain attacks, ongoing maintainer burnout, and a general feeling that the security posture of the open source environment has not changed much since the days of log4j. Some have argued that nothing short of a system-wide overhaul will secure the Internet. Matthew Hodgson, the co-founder of the encrypted protocol Matrix, recently argued that FOSS should be a publicly funded service, one that—much like America’s real, physical infrastructure—receives ongoing federal funding and support.

Of course, the likelihood that such a drastic transformation will actually happen seems marginal, which leaves those who maintain the open-source ecosystem with a Sisyphean task. Since last summer, Brian Behlendorf has moved on to another position within the Linux Foundation, passing the security torch to former Google Cloud engineer Omkhar Arasaratnam, who now serves as general manager of OpenSSF. Arasaratnam describes his job as “securing the internet,” a task he admits is “incredibly difficult.” A better descriptor might be “impossible.” Still, he admits that while there are no silver bullets, he can’t help but be hopeful because of what’s at stake. “If we get this right, we help 8 billion people,” he says.