3 Body Problem’s VFX Designer on Creating a Sci-Fi World

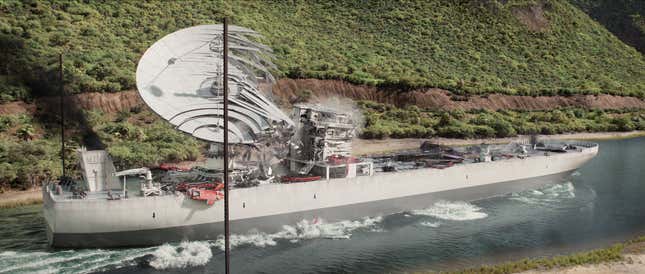

Netflix’s 3 Body Problem won over audiences with its sci-fi mysteries, complex characters, and startling visuals. If you’re still haunted by that Panama Canal scene, in which a ship full of people is sliced into ribbons, or by the unpredictable landscapes of the VR game world, here’s a peek at how they came together.

In this VFX reel provided exclusively to io9 by the Netflix-owned Scanline VFX, you can get a look at the Panama Canal scene, as well as two memorable sequences from the VR world: the dehydration/rehydration of the “Follower” who’s constantly doomed by choices made in the game, and the scene in which the world loses gravity and everyone begins floating into the sky. Check out the video here!

io9 also got a chance to chat over email with Boris Schmidt, one of the two Scanline VFX supervisors who worked on this project. He and Mathew Giampa reported to 3 Body Problem’s overall VFX supervisor, Stefen Fangmeier, and VFX producer Steve Kullback. It was a reunion for Scanline, since the studio had also worked with the same duo on Game of Thrones.

Cheryl Eddy, io9: On a project like 3 Body Problem, how much of what you create is pulled directly from the script, and how much is left open to artistic interpretations?

Boris Schmidt: In a project like 3 Body Problem, a significant portion of what we create is guided by the script, which ensures that the core narrative elements stay intact. However, there is substantial room for artistic interpretation, particularly in areas where the visual storytelling and special effects are complex and unusual.

For instance, developing the look of the various massive landscapes, especially in the million-year time-lapse sequence, relied heavily on art direction and creative vision. These scenes gave us the flexibility to capture the otherworldly qualities of the landscapes and effects.

Similarly, the effects surrounding the rehydration process and the depiction of a crumbling, deep-frozen girl (the “Follower” character) were subject to artistic interpretation. These visual elements needed to evoke emotion and awe, while still adhering to the broader narrative and editorial context. The art direction in these cases required a balance of technical skill and creative imagination, allowing the effects to complement and enhance the storyline without overwhelming it.

io9: What was your starting point when designing the world within the VR game?

Schmidt: When we were designing the world within the VR game, my starting point was a comprehensive understanding of the client’s vision and the project’s core concept. The process involved several key steps:

1. Collaboration with the client:

I began by talking with the client VFX supervisor, Stefen Fangmeier, to understand his expectations for the storyline, the gameplay mechanics, and his aesthetic vision. These discussions set the foundation for the entire design process.

2. Gathering concept art and references:

I collected and reviewed all the concept art, storyboards, and previs edits provided by the client. This helped me understand the desired visual style, environment layout, and overall tone of the game world. I also gathered reference images from the internet, focusing on elements like architecture, landscapes, textures, and color palettes to broaden the creative scope. In some cases, we used generative AI to gather additional reference.

3. Analyzing the provided Unreal Engine previs files:

We analyzed existing game-engine files that were used to create the previs, to gain insights into scene layout and explore various camera angles.

4. Brainstorming and planning:

After collecting all the necessary references and information, I engaged in brainstorming sessions with the various department supervisors and leads. This phase involved sketching initial concepts, outlining the game world layout, and considering how to creatively and technically build these worlds.

5. Building the virtual environment:

Once we had a clear plan, we began building the virtual environments. This involved environment creation, 3D modeling, texturing, lighting, complex FX setups, crowds, and more. From that point on, we continuously refined the design through iterative review meetings and client feedback.

io9: The VR game world is mostly massive landscapes and crowds, but there are also some up-close, intimate moments, including the “dehydrating” and “rehydrating” sequence seen in the reel. How did you approach creating that particular series of effects?

Schmidt: The effect required significant research and development, but after considering various methods, we chose the following approach:

First, we built internal skeleton geometry for the main character so it could act as a collider for the outer skin mesh. The skeleton and the outer skin were both controlled by the same animation rig, so we could pose and animate them with regular animation tools. We used Houdini’s Vellum cloth simulation to flatten the skin and bones, then rolled them up with another rig and cloth simulation. We also rolled up the character rig itself to allow for a coordinated unrolling effect. We controlled the timing of these simulations with 3D gradients and noise for more precise art direction.

The bones and skin were inflated by a set of 3D gradients, which let us adjust the timing of each independently. These gradients also helped us transition between three different surface shaders: one that made the skin look dry and leathery, another that gave it a translucent look, and a final shader for human skin. The shader transitions were based on surface curvature and simulation attributes. We used a stress map, which showed where the character’s skin was stretched or compressed, to create additional effects. We also used a curvature map to identify concave and convex areas, which helped add fine detail and extra texture to the surface.

The hair was simulated separately, with guide splines created in Houdini and then transferred to Maya. We decided to render all shader variations and transitions separately to give full control in compositing. This came at the cost of heavy render times, including for the stunning translucent look, where you can still see the internal skeleton.

Then we fine-tuned the results, making adjustments and removing unwanted artifacts with shot-modeling on top of the final Alembic caches. FX provided additional aeration simulations and air bubbles emitted from the character. As always, the final look was dialed in with some compositing love.

io9: At one point, the VR world also experiences a total loss of gravity. What references did you have for the movement in that scene, which is a blend of terrifying and graceful?

Schmidt: We looked at zero-gravity reference from the International Space Station and footage from reduced-gravity aircraft (nicknamed the “Vomit Comet”). We also drew on the underwater reference we had collected for the rehydration scene, which also had elements of zero-gravity floating. We closely analyzed how long hair moves in space and underwater for the little girl character named “the Follower.” We decided to go with the underwater look, because the space reference felt too stiff and boring compared to the flowing underwater motion.

For characters in the foreground, including the horses, we decided to use full animation in order to have more control and to stay flexible in addressing notes. Our animation team came up with great ideas for the horses’ movements in zero gravity, such as kicking with their hooves and rotating their heads, to show the animals’ panic in this unusual situation.

For midground and distant characters, we used a mix of animated, motion-captured, and ragdoll-simulated characters. Our motion capture team did a great job suspending the performers on wires to help with the antigravity feel. Our FX team simulated all of the non-living assets, like the disintegrating towers and the floating roof tiles, banners, and flags. They also faced the big challenge of driving the overall motion of these millions of characters and assets with particle and physics simulations.

io9: The Judgment Day sequence is probably the most memorable moment in the entire series—it’s so shocking and makes a huge visual impact. What did you have to take into consideration, to make it feel as realistic as possible?

Schmidt: At this point I want to give special recognition to co-VFX supervisor Mathew Giampa for his outstanding contribution on the tanker sequence. Mathew’s expertise and his creative vision were crucial to achieve such impressive results. The realism of the sequence was achieved through a combination of elements. Here are a few key components that were essential.

First, we needed to construct an entire digital representation of the Panama Canal and make it appear as lifelike as possible. Our reference was the Culebra Cut section of the canal, known for its distinct terraces. This involved creating a variety of trees and plants native to the region, effectively building an entire photoreal CG environment from the ground up.

We also took on the task of building, and helping to design, the tanker named Judgment Day. This required us to match the layout of the deck to the practical set, ensuring that every detail matched accurately. That included assets like the basketball court, the helicopter, and the landing pad, which had to be placed precisely on the deck.

One of the challenging aspects was depicting the nanofilaments, which operate on an atomic scale. The cuts they produce are not visible until the pieces begin to slide. Showing this effect on both the tanker and the people was a visual challenge, as there were no real-world examples to draw from. Our FX team had to convincingly portray the scale of these slices, the weight of the individual pieces, and how they would physically interact.

Our look development and lighting teams played a crucial role in tying everything together. They worked to ensure that the lighting and shaders were consistent across the environment, tanker, and heavy FX simulations.

io9: What was the biggest challenge you faced working on that escalation of everything across the ship being sliced to pieces?

Schmidt: Designing complex FX setups for a ship-slicing scene involves orchestrating a symphony of effects that must work in unison. This encompasses multiple physics simulations, including the dramatic split of the ship itself, the swirling movement of the canal water, and the eruption of soil on the shore as the ship and debris crash onto land.

Alongside these larger elements are fine dust particles kicked up by the impact and objects tumbling and colliding on the ship’s deck. There is also the simulated slicing of human figures, small fragments scattering through the air, and waves of water spray hitting the surroundings. Fires break out, smoke billows, sparks fly, and occasional explosions erupt as the ship is torn apart.

Coordinating this complex array of effects requires meticulous attention to detail to ensure the continuity of FX throughout multiple shots and across various departments. Each component must be carefully art-directed so that the sequence maintains a seamless flow from start to finish, with all the different elements perfectly aligned and synchronized. Kudos to our FX team and all the other departments creating this awesome sequence.

io9: Which sequence took the longest or the most work to complete, and why?

Schmidt: Certainly, the Judgment Day sequence required considerable time to produce due to the reasons detailed in the previous answer. Other scenes also demanded extended development periods. For instance, the reverse gravity scene featuring a floating army took quite a while because it involved many unique shot types, such as simulating zero-gravity water. Additionally, there was the challenge of creating the human calculator. The gruesome scene where Turing and Newton are sliced by Khan required precise attention to detail. Likewise, the full CGI time-lapse sequence, complete with animated light setups and clouds, added to the lengthy development process.

You can watch 3 Body Problem on Netflix.
